The era of digital farming has brought to the fore copious volumes of agri-data that the different stakeholders can harness to make the agroecosystem more efficient, productive, and streamlined. Transactions at each stage of the supply chain, be it capturing farm-level data using agtech, securing the required certifications for a commodity, or tracking its movement along the supply chain, generate enormous volumes of data around the world every minute. Smart farming tools such as farm management software, drones, sensors, and other IoT devices add substantially to the real-time data available. There is therefore a need for a mechanism that consumes all of this data from different sources and delivers information in a way that is logical, organised, and instant. This is where Apache Kafka® comes into play.

What Is Apache Kafka®?

Kafka was created by engineers at LinkedIn as a messaging system built on the abstraction of a distributed commit log (also called a transaction log): an ordered, append-only sequence of records. Since it was made open source nearly a decade ago, Kafka has evolved into an optimised messaging system that ingests and processes streaming data generated by multiple sources in real time.

Kafka is best known as a fast, durable, fault-tolerant, and highly scalable publish-subscribe messaging system, capable of handling trillions of events each day. More than 80% of all Fortune 100 companies, across industries, use it for its speed and performance. Think of The New York Times, Pinterest, Airbnb, Cisco, Netflix, Spotify, Twitter, and the many other Internet services that generate enormous volumes of real-time data: all of them use Kafka to stream that data into their systems in real time.

Its three primary functions are to:

- Publish (write) and subscribe to (read) streams of records or events

- Durably store these streams of events in the order in which they were written (ordering is guaranteed within each partition of a topic)

- Process the event streams in real time
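The three functions listed above can be made concrete with a toy, in-memory Python sketch of a single-partition log. It is not Kafka's client API: the classes `InMemoryTopic`, `Subscriber`, and `Record`, and the sample keys and values, are hypothetical and chosen only to show how appending to an ordered log (write), reading from an offset (read), and handing records to a handler (process) fit together. A real deployment would use a Kafka client library talking to Kafka brokers.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Record:
    offset: int  # position of the record in the log
    key: str
    value: str


@dataclass
class InMemoryTopic:
    """Toy single-partition, append-only log; an illustration, not Kafka itself."""
    records: List[Record] = field(default_factory=list)

    def publish(self, key: str, value: str) -> int:
        # Write: append to the end of the log; the offset is simply the index.
        offset = len(self.records)
        self.records.append(Record(offset, key, value))
        return offset

    def read(self, from_offset: int) -> List[Record]:
        # Read: every record at or after from_offset, in the order it was written.
        return self.records[from_offset:]


class Subscriber:
    """Tracks its own read position, so subscribers never interfere with each other."""

    def __init__(self, topic: InMemoryTopic, handler: Callable[[Record], None]):
        self.topic = topic
        self.handler = handler
        self.position = 0  # next offset this subscriber will read

    def poll(self) -> int:
        # Process: pass each unread record to the handler, then advance.
        new_records = self.topic.read(self.position)
        for record in new_records:
            self.handler(record)
        self.position += len(new_records)
        return len(new_records)


# Usage: one live subscriber, plus a late one that replays the stored log.
topic = InMemoryTopic()
topic.publish("farmer-42", "farmer added")
topic.publish("farmer-42", "plot added")

live: List[str] = []
live_sub = Subscriber(topic, lambda r: live.append(r.value))
live_sub.poll()  # reads both stored records, in write order

topic.publish("farmer-42", "harvest collected")
live_sub.poll()  # reads only the record added since the last poll

replayed: List[str] = []
Subscriber(topic, lambda r: replayed.append(r.value)).poll()  # starts at offset 0
```

Because each subscriber keeps its own offset, a system that was offline or joined later can catch up from the stored log; this is what lets a log-based broker act as a buffer and support message replay. In Kafka itself, committed offsets are tracked per consumer group, and ordering holds within a partition rather than across a whole topic.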

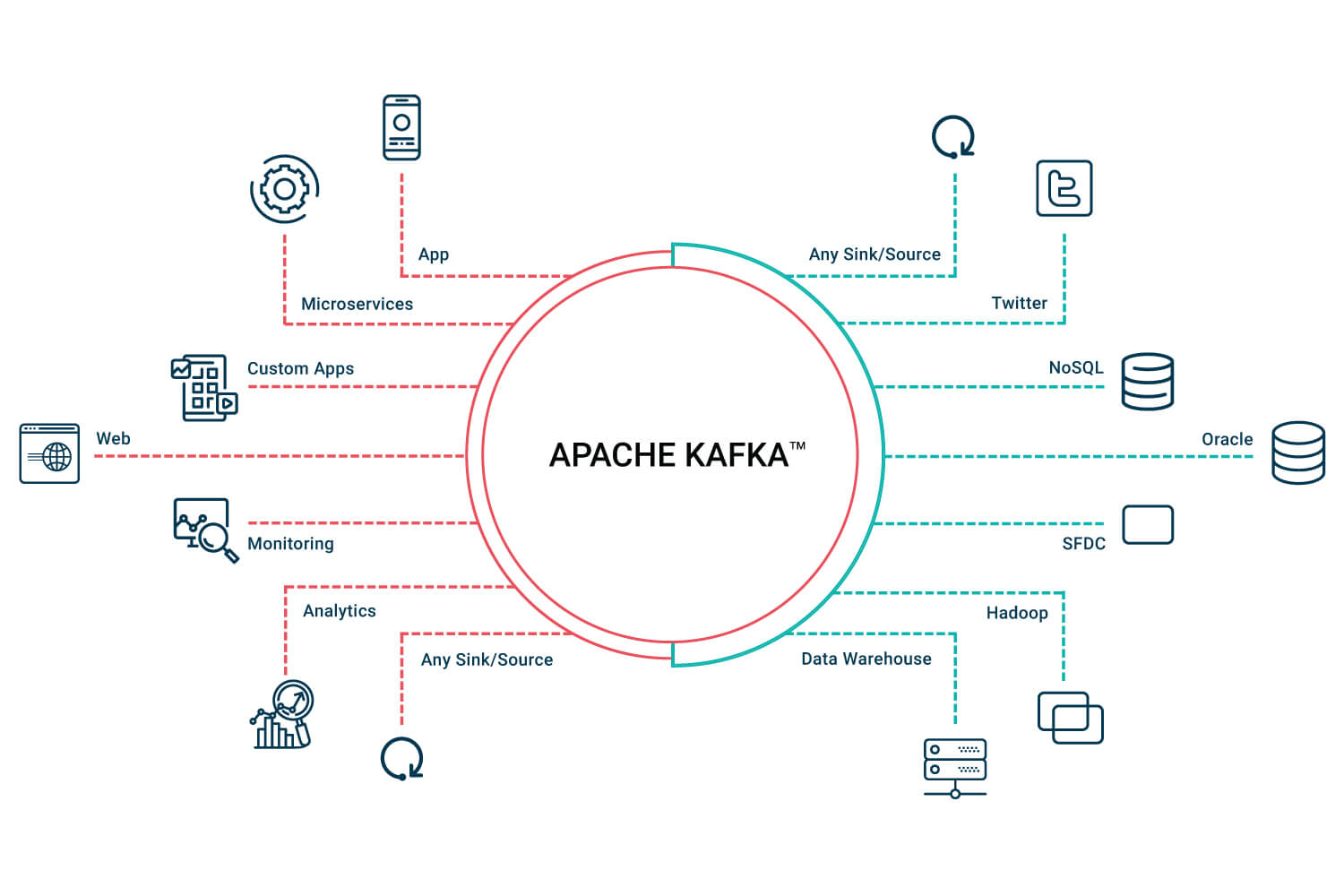

Kafka is typically used to build pipelines for real-time streaming data to process and move data reliably from one system to another or, alternatively, from the consumer to the application that ingests these streams of data. Some of Kafka’s use-cases include tracking website activity, replaying messages, real-time analytics, error recovery, log aggregation, stream processing, ingesting data into Spark or Hadoop, and metrics collection and monitoring.

Image source: Axula

How Is Kafka Powering CropIn?

Apache Kafka® acts as a buffer between data producers and data consumers. It also makes CropIn's cloud-native agtech platform more resilient by serving as a reliable, low-latency communication bus between its microservices.

In the upgraded SmartFarm Plus™, Kafka serves as a message broker that relays messages between multiple systems, much like a postman. For instance, when an extension agent or field officer records a new event in the mobile app, such as adding a new farmer or farm plot, raising an alert from a plot, or recording a harvest collection, Kafka receives the event as a message and makes it available to every system it is integrated with.
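To illustrate the postman analogy, the sketch below models a single field event being serialised to JSON and fanned out to two integrated systems. Everything in it is hypothetical: the event names, the `FieldEvent` and `ToyBroker` classes, and the ERP and notification subscribers are not CropIn's actual schema or services. The toy broker also pushes messages straight to subscribers to keep the example short, whereas real Kafka consumers pull messages from topics at their own pace.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable, Dict, List

# Hypothetical event types mirroring the app actions described above.
EVENT_TYPES = {"farmer_added", "plot_added", "plot_alert_raised", "harvest_collected"}


@dataclass
class FieldEvent:
    event_type: str
    recorded_by: str  # e.g. a field officer's user ID (hypothetical)
    payload: Dict[str, str]

    def to_message(self) -> bytes:
        # Serialise to JSON bytes, the form in which a broker would carry it.
        if self.event_type not in EVENT_TYPES:
            raise ValueError(f"unknown event type: {self.event_type}")
        return json.dumps(asdict(self), sort_keys=True).encode("utf-8")


class ToyBroker:
    """Relays each published message to every subscribed system (pub-sub fan-out)."""

    def __init__(self) -> None:
        self.subscribers: Dict[str, Callable[[bytes], None]] = {}

    def subscribe(self, system_name: str, deliver: Callable[[bytes], None]) -> None:
        self.subscribers[system_name] = deliver

    def publish(self, message: bytes) -> None:
        # Every subscriber receives its own copy of every message.
        for deliver in self.subscribers.values():
            deliver(message)


# Usage: one harvest event reaches both a hypothetical ERP sync and notifier.
erp_inbox: List[dict] = []
notification_inbox: List[dict] = []

broker = ToyBroker()
broker.subscribe("erp", lambda m: erp_inbox.append(json.loads(m)))
broker.subscribe("notifications", lambda m: notification_inbox.append(json.loads(m)))

event = FieldEvent("harvest_collected", "officer-7", {"plot_id": "P-101", "quantity_kg": "850"})
broker.publish(event.to_message())
```

Because each integrated system is a separate subscriber, adding a new integration does not require changing the mobile app that produces the event.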

If an enterprise opts to integrate CropIn's platform with its internal IT infrastructure, ERP, or other third-party software through API gateways, Kafka acts as the channel between the two applications, enabling real-time integration between the systems. Push notifications can also be enabled for events or activities recorded in the SmartFarm Plus™ app according to each user's preferences, a feature not previously available in SmartFarm®. Kafka's role as a message broker can be extended further to integrate farm equipment and machinery, so that users can schedule events or tasks for the machinery to carry out based on preconfigured conditions or parameters.
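A notification service reading the same event stream could apply per-user preferences roughly as follows. This is a minimal sketch under stated assumptions: the `Event` type, the `notifications_for` function, the user IDs, and the idea that preferences are stored as a set of event types per user are all hypothetical, not a description of how SmartFarm Plus™ implements notifications.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Event:
    event_type: str  # hypothetical names, e.g. "plot_alert_raised"
    plot_id: str


def notifications_for(
    events: List[Event], preferences: Dict[str, Set[str]]
) -> Dict[str, List[str]]:
    """Build, per user, one message for each event whose type that user opted into."""
    outbox: Dict[str, List[str]] = {user: [] for user in preferences}
    for event in events:  # events are handled in the order they were consumed
        for user, wanted_types in preferences.items():
            if event.event_type in wanted_types:
                outbox[user].append(f"{event.event_type} on plot {event.plot_id}")
    return outbox


# Usage with made-up users and events.
events = [
    Event("plot_alert_raised", "P-101"),
    Event("harvest_collected", "P-101"),
    Event("plot_added", "P-205"),
]
preferences = {
    "manager-1": {"plot_alert_raised", "harvest_collected"},
    "agronomist-2": {"plot_alert_raised"},
    "viewer-3": set(),
}
result = notifications_for(events, preferences)
```

Users who have opted out of everything still get an entry, just an empty one, which keeps the output shape predictable for whatever component actually sends the notifications.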

CropIn is a global agtech platform that spearheads innovation in digital agriculture. As an ecosystem that is perpetually evolving and pushing the limits of agtech, it gives its end users greater opportunities to achieve more each day. The enhanced version of SmartFarm empowers users with what we call 'ThePlusFactor', enabling them to stay on top of all the ground-level action.

CropIn's new SmartFarm Plus™ opens up many new possibilities. Give us a call today to discover more.