AgriTech is transforming farming and food systems faster than anyone imagined, and at CropIn we make a habit of leading innovation. We continue to explore new, cutting-edge technologies and to digitally empower diverse actors in the agroecosystem to achieve their respective objectives. Because CropIn empowers clients in over 70 countries to maximise their per-acre value, it is critical for us to provide services that are consistent across geographies and deliver an excellent user experience. To achieve this, we recently adopted containerisation and a microservices-based approach to designing our applications. So what does that mean?

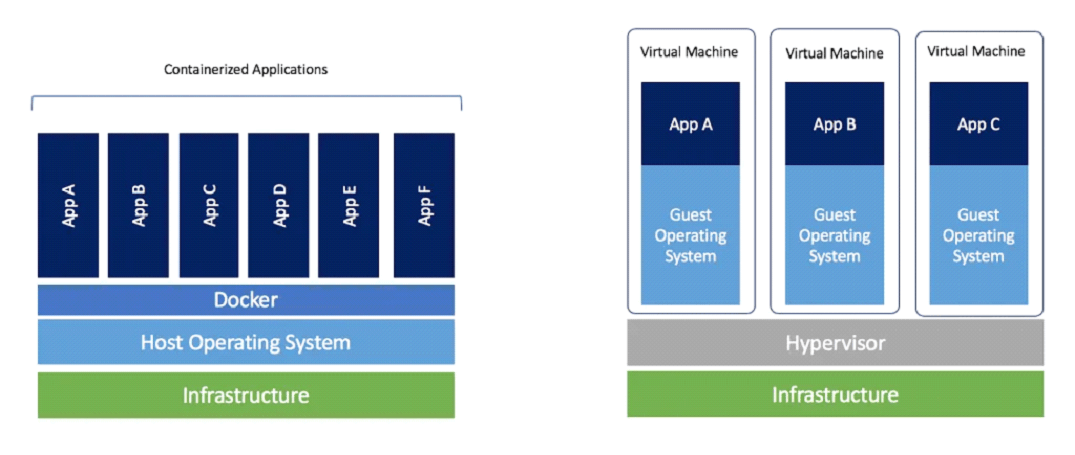

Container technology, a term borrowed from the shipping industry for obvious reasons, has revolutionised the way apps, agriculture apps among them, are packaged, enabling faster deployment. It has also made servers more efficient and helps software run reliably regardless of the environment it is deployed in. It addressed several problems that VM-based deployments faced, such as the CPU overhead and resources required to build and run a virtual machine (VM), the limit on how many applications a server can run efficiently, incompatibilities arising from operating system (OS) differences, large storage requirements, and the time taken to boot a full OS.

How Does Containerisation Work?

Most of us have at least seen images of cargo ships carrying uniformly sized containers that cranes can conveniently load and unload anywhere in the world. In much the same way, a software container includes not only the application but also all its dependencies, such as configuration files, binaries, and libraries (bins/libs). Because a container does not carry a full guest OS of its own, each unit is also far smaller: typically a few tens of megabytes, compared with the several gigabytes a VM occupies. Since it bundles all its runtime components and is abstracted from the host OS, a container is portable and ready to run on any platform or cloud that provides a compatible container runtime.

Containerisation also makes it possible to break down a full-size (monolithic) application into smaller modules, called microservices, which communicate with each other through application programming interfaces (APIs). This modularity makes it convenient to develop, deploy, and scale each service independently, and to change only the affected parts of the application instead of rebuilding the whole. As a result, developers achieve shorter turnaround times and better process efficiency. Microservices are also lightweight, so they can be started and ready to serve almost instantly. Furthermore, because they are platform- and device-agnostic, apps built from them deliver a consistent user experience irrespective of the underlying infrastructure.
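To make the idea of services talking only through APIs more concrete, here is a minimal sketch using only Python's standard library. The two services, their names (`WeatherHandler`, `irrigation_advice`), the `/forecast` endpoint, and the 10 mm rain threshold are hypothetical illustrations, not CropIn's actual services; in a real deployment each service would run in its own container rather than in one process.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen


class WeatherHandler(BaseHTTPRequestHandler):
    """Hypothetical 'weather' microservice: exposes its data only via an HTTP/JSON API."""

    def do_GET(self):
        if self.path != "/forecast":
            self.send_error(404)
            return
        body = json.dumps({"region": "demo", "rain_mm": 12}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging for the demo


def start_weather_service() -> HTTPServer:
    """Start the weather service on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), WeatherHandler)  # port 0: let the OS pick
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def irrigation_advice(weather_url: str) -> str:
    """Hypothetical 'advisory' microservice logic.

    It never imports the weather code; it depends only on the weather API's contract.
    """
    with urlopen(f"{weather_url}/forecast", timeout=5) as resp:
        forecast = json.load(resp)
    return "skip irrigation" if forecast["rain_mm"] >= 10 else "irrigate"


if __name__ == "__main__":
    server = start_weather_service()
    host, port = server.server_address
    try:
        print(irrigation_advice(f"http://{host}:{port}"))  # prints: skip irrigation
    finally:
        server.shutdown()
        server.server_close()
```

Because the advisory logic depends only on the `/forecast` contract, the weather service could be rewritten, redeployed, or scaled on its own without any change to the advisory code, which is the kind of independence described above.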

Advantages of Containerisation Technology:

- Reliability: A container is a stand-alone unit that logically bundles all of its software dependencies instead of relying on what is installed on the host OS. Moving it from one environment to another is therefore hassle-free: the application runs as expected when it is transferred from, say, a developer’s laptop to production, even if the two run different operating systems, leaving almost no room for compatibility issues.

- Fault isolation: Because containers run independently, a fault in one unit does not affect the operation of the others. The development team can isolate the faulty container and fix the issue without unplanned downtime in the remaining containers.

- Productivity: With fewer bugs arising from compatibility issues, app developers and IT operations teams can devote more time and resources to building additional features and functionality for end-users. Moreover, microservices allow different teams to work on each container independently, which shortens software development time.

- Efficiency: A VM includes an entire guest OS, which adds overhead; containers instead share the host OS kernel. Containers are therefore lightweight and need fewer resources to operate than VMs.

- Profitability: Containers bring down server and licensing costs, and packaging components together so that they move easily across platforms reduces expenditure considerably.

- Flexibility: Containers can be started and stopped on demand because the host OS is already up and running on the server. Start-up therefore takes only a few seconds, and resources are freed almost instantly when certain functions are no longer required. If a container crashes, it can be promptly restarted to resume its task.

- Immunity: Because containers are isolated from each other, malicious code in one container is less likely to spread to others or to affect the host system itself. In addition, setting specific security permissions can automatically keep unwanted components out of containers.
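The Flexibility point notes that a crashed container can simply be restarted. Kubernetes, for example, documents that the kubelet restarts failed containers with an exponential back-off delay (10 s, 20 s, 40 s, and so on) capped at five minutes. The sketch below imitates that idea in plain Python; `restart_delays` and `supervise` are hypothetical helpers, and a real orchestrator does more, such as resetting the back-off once a container has run cleanly for a while.

```python
import time
from typing import Callable, List, Optional


def restart_delays(crashes: int, base: float = 10.0, cap: float = 300.0) -> List[float]:
    """Seconds to wait before each restart: doubles after every crash, never exceeds `cap`."""
    delays, delay = [], base
    for _ in range(crashes):
        delays.append(delay)
        delay = min(delay * 2, cap)
    return delays


def supervise(task: Callable[[], None], max_restarts: int,
              sleep: Callable[[float], None] = time.sleep) -> int:
    """Run `task`, restarting it with exponential back-off whenever it raises.

    Returns the number of restarts needed before the task completed cleanly.
    Raises RuntimeError if the task still fails after `max_restarts` restarts.
    """
    # One delay per allowed restart, plus None to mark the final attempt.
    schedule: List[Optional[float]] = [*restart_delays(max_restarts), None]
    for restarts, delay in enumerate(schedule):
        try:
            task()
            return restarts
        except Exception as exc:
            if delay is None:
                raise RuntimeError("task kept crashing; giving up") from exc
            sleep(delay)  # back off before restarting
    raise AssertionError("unreachable: the schedule always ends with None")


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_task() -> None:
        # Simulated container process that crashes on its first two runs.
        calls["n"] += 1
        if calls["n"] < 3:
            raise ValueError("simulated crash")

    waits: List[float] = []
    print(supervise(flaky_task, max_restarts=5, sleep=waits.append))  # 2
    print(waits)  # [10.0, 20.0]
    print(restart_delays(7))  # [10.0, 20.0, 40.0, 80.0, 160.0, 300.0, 300.0]
```

Injecting `sleep` keeps the demo instant while still recording the delays a real supervisor would wait.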

How Are Containers Managed?

Container technologies such as Docker, CoreOS rkt, LXC, Mesos, and several others have given developers a new way to code and deploy application software. However, building complex applications, which comprise many components packaged into numerous containers, requires container orchestration platforms or cluster managers to coordinate these individual units.

One such container-centric management system is Kubernetes, a software project first conceived by engineers at Google. Also known as K8s or Kube, it automates many of the manual processes involved in deploying, managing, and scaling containerised applications. Because Kubernetes is open source, it places very few restrictions on how it can be used, so organisations can run it almost anywhere: on-premises or in public, private, or hybrid clouds.

Kubernetes is widely regarded as an ideal platform for hosting cloud-native applications, including CropIn’s, because it supports fast, elastic scaling, resource monitoring, easier roll-outs and roll-backs, health checks, and self-healing through functions such as auto-placement, auto-restart, auto-replication, and auto-scaling. It is a production-ready, enterprise-grade platform that can host a wide range of application architectures.
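Auto-scaling, one of the functions listed above, is easiest to see as arithmetic. Kubernetes' Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The function below is a simplified sketch of that rule: the 10% tolerance band and the min/max clamp mirror the autoscaler's documented behaviour, but the real controller also accounts for pod readiness, missing metrics, and stabilisation windows, which are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified Horizontal Pod Autoscaler rule.

    current_metric and target_metric are, e.g., average CPU utilisation and its target (%).
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current size to avoid flapping.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Never go outside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, 90, 60))   # 6: load is 1.5x the target, so scale out
    print(desired_replicas(4, 20, 60))   # 2: load is well below the target, so scale in
    print(desired_replicas(4, 63, 60))   # 4: within 10% of the target, no change
    print(desired_replicas(8, 180, 60))  # 10: 24 replicas wanted, clamped to max_replicas
```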

Kubernetes works by grouping the containers that make up an application into logical units (pods), giving them names and labels so they can be managed and discovered as a group, and distributing the load among them. This enables environment-agnostic portability, easy and efficient scaling, and flexible growth.
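The grouping and load distribution described above can be sketched as follows. In Kubernetes, a Service finds its backend pods through a label selector and spreads traffic across the ready ones. This sketch uses round-robin for simplicity; the actual backend choice depends on the kube-proxy mode (the default iptables mode, for instance, picks backends randomly). The `Pod` and `Service` classes here are hypothetical stand-ins, not the Kubernetes API.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Dict, List


@dataclass
class Pod:
    """Hypothetical stand-in for a group of containers scheduled together."""
    name: str
    labels: Dict[str, str]
    ready: bool = True


def select(pods: List[Pod], selector: Dict[str, str]) -> List[Pod]:
    """Return the ready pods whose labels contain every key/value pair in `selector`."""
    return [p for p in pods
            if p.ready and all(p.labels.get(k) == v for k, v in selector.items())]


class Service:
    """Hypothetical stand-in for a Kubernetes Service: one stable name, many backends."""

    def __init__(self, pods: List[Pod], selector: Dict[str, str]):
        self.endpoints = select(pods, selector)
        if not self.endpoints:
            raise ValueError(f"no ready pods match {selector}")
        self._rotation = cycle(self.endpoints)

    def route(self) -> str:
        """Pick the next backend in round-robin order."""
        return next(self._rotation).name


if __name__ == "__main__":
    pods = [
        Pod("weather-1", {"app": "weather"}),
        Pod("weather-2", {"app": "weather"}),
        Pod("weather-3", {"app": "weather"}, ready=False),  # failing its health check
        Pod("advisory-1", {"app": "advisory"}),
    ]
    weather = Service(pods, {"app": "weather"})
    print([weather.route() for _ in range(4)])
    # ['weather-1', 'weather-2', 'weather-1', 'weather-2']
```

Unlike this snapshot, a real Service's endpoint list is updated continuously as pods start, stop, or change readiness.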

Some of the tasks that Kubernetes allows an organisation to perform are:

- Orchestrating containers across multiple hosts

- Optimising resource utilisation to run enterprise apps by making the best use of the hardware

- Managing and automating app deployments and updates

- Scaling containerised apps and their resources on demand

- Declaratively managing microservices so that deployed apps keep running as intended
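Declarative management, the last item above, means stating the desired end state and letting a control loop work out the steps to reach it. The sketch below shows that reconciliation idea with plain dictionaries mapping a hypothetical app name to a replica count; it illustrates the pattern Kubernetes controllers follow, not their actual implementation.

```python
from typing import Dict

State = Dict[str, int]  # app name -> number of running replicas


def diff(desired: State, actual: State) -> Dict[str, int]:
    """Per app: positive = replicas to start, negative = replicas to stop."""
    return {
        app: desired.get(app, 0) - actual.get(app, 0)
        for app in sorted(desired.keys() | actual.keys())
        if desired.get(app, 0) != actual.get(app, 0)
    }


def reconcile(desired: State, actual: State) -> State:
    """One pass of the control loop: act on every difference, return the new actual state."""
    new_actual = dict(actual)
    for app, delta in diff(desired, actual).items():
        new_actual[app] = new_actual.get(app, 0) + delta
        if new_actual[app] == 0:
            del new_actual[app]  # nothing left running for this app
    return new_actual


if __name__ == "__main__":
    desired = {"advisory": 3, "weather": 2}
    actual = {"weather": 3, "legacy": 1}
    print(diff(desired, actual))  # {'advisory': 3, 'legacy': -1, 'weather': -1}
    actual = reconcile(desired, actual)
    print(diff(desired, actual))  # {}  (converged)

    actual["advisory"] -= 1       # simulate a crashed replica
    print(diff(desired, actual))  # {'advisory': 1}
    actual = reconcile(desired, actual)
    print(actual == desired)      # True
```

The operator only ever edits `desired`; recovering from the simulated crash needs no new instruction, because the loop keeps comparing the two states.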

CropIn, Now Powered By Kubernetes

Microservice architecture is currently a go-to choice for software development because it enables rapid, frequent, and reliable delivery of complex applications. While several hyper-growth organisations such as Amazon, Netflix, Uber, SoundCloud, and eBay have adopted microservices, the approach is still in its nascent stages in the agritech industry, and CropIn is one of its early adopters.

Moving our flagship platform to Kubernetes has enabled us to stay cloud-native and also run workloads effectively. It is now possible for us to:

- Manage and update operations as code;

- Automate complex deployments and rollbacks;

- Deploy smaller, frequent, and reversible alterations with ease;

- Achieve higher resilience, fault tolerance, and isolation in our workloads;

- Scale workloads and capacity on-demand;

- Establish greater observability into the platform resources; and,

- Optimise our cloud infrastructure costs.

With microservices and containers, instead of one large application, we now have smaller functional modules that work together to achieve the same results more effectively. This approach helps us reach a higher scale than was previously possible in the agritech space. Furthermore, an automated CI/CD (Continuous Integration/Continuous Deployment) pipeline will allow us to take our new features from idea to production much faster and to reduce downtime, so that all the services we offer remain available.

With microservices, our end-users benefit from an agritech solution that not only meets their objectives but is also easy to install on any OS and is upgraded with new features at frequent intervals. Our applications are primed to scale with the requirements of our rapidly growing clientele, making sure that end-users have everything they need, at the right moment, to run their business operations smoothly.


During the coming weeks, some of our advanced apps will transition from a monolithic architecture to one based on microservices powered by Kubernetes. How will this affect CropIn’s offerings?

And that is not all; there is more happening at CropIn. Stay tuned for updates!